This article was originally published in the Huffington Post.

For a long time now, scientists have been held in thrall by publishers. They worked voluntarily, without pay, as editors and reviewers for the publishers, and they allowed their research to be published in scientific journals without receiving anything in return. No wonder scientific publishing has long been considered a lucrative business.

Well, that’s no longer the case. Now, scientific publishers are struggling to maintain their stranglehold over scientists. If they succeed, science and the pace of progress will take a hit. Luckily, the entire scientific landscape is turning on them, but a little support from the public will go a long way toward ensuring the eventual downfall of an institution that is no longer relevant or useful for society.

To understand why things are changing, we need to look back in history to 1665, when the British Royal Society began publishing research results in a journal called Philosophical Transactions of the Royal Society. Since the number of pages available in each issue was limited, the editors could only pick the most interesting and credible papers to appear in the journal. As a result, scientists from all over Britain fought to have their research published in the journal, and any scientist whose research appeared in an issue gained immediate recognition throughout Britain. Scientists were even willing to become editors for scientific journals, since that was a position that commanded respect and gave them the power to push their views and agendas in science.

Thus was the deal struck between scientific publishers and scientists: the journals provided a platform for the scientists to present their research, and the scientists fought tooth and nail to have their papers accepted into the journals, often paying out of their own pockets for it to happen. The journal publishers then held full copyright over the papers, to ensure that the same paper would not be published in a competing journal.

That, at least, was the old way of publishing scientific research. The reason the journal publishers were so successful in the 20th century was that they acted as aggregators and selectors of knowledge. They employed the best scientists in the world as editors (almost always for free) to select the best papers, and they gathered all the necessary publishing processes in one place.

And then the internet appeared, along with a host of other automated processes that let every scientist publish and disseminate a new paper with minimal effort. Suddenly, publishing a new scientific paper and making the scientific community aware of it could have a radical new price tag: it could be completely free.

Free Science

Let’s go through the process of publishing a research paper, and see how easy and effortless it has become:

- The scientist sends the paper to the journal: This can now be done easily over the internet, with no cost for mail delivery.

- The paper is rerouted to the editor dealing with the paper’s topic: This is done automatically, since the authors specify keywords that route the paper straight to the right editor’s e-mail. Since the editor is actually a scientist volunteering to do the work for the publisher, there’s no cost attached anyway. Nor is there any need for a human secretary to spend time and effort cataloguing papers and sending them to editors manually.

- The editor sends the paper to specific scientific reviewers: All the reviewers are working for free, so the publishers don’t spend any money there either.

Let’s assume that the paper was accepted, and is going to appear in the journal. Now the publisher must:

- Paginate, proofread, typeset, and ensure the use of proper graphics in the paper: These tasks are now performed nearly automatically using word processing programs, and are usually handled by the original authors of the paper.

- Print and distribute the journal: This is the only step that costs actual money by necessity, since it is performed in the physical world, and atoms are notoriously more expensive than bits. But do we even need this step anymore? I have been walking the corridors of academia for more than ten years, and I’ve yet to see a scientist with his nose buried in a printed journal. Instead, scientists are reading the papers on their computer screens, or printing them in their offices. The mass-printed version is almost completely redundant. There is simply no need for it.

In conclusion, it’s easy to see that while the publishers served an important role in science a few decades ago, they are just not necessary today. The above steps can easily be conducted by community-managed sites like arXiv, and even the selection of high-quality papers can be performed today by the scientists themselves, in forums like Faculty of 1000.

The publishers have become redundant. But worse than that: they are damaging the progress of science and technology.

The New Producers of Knowledge

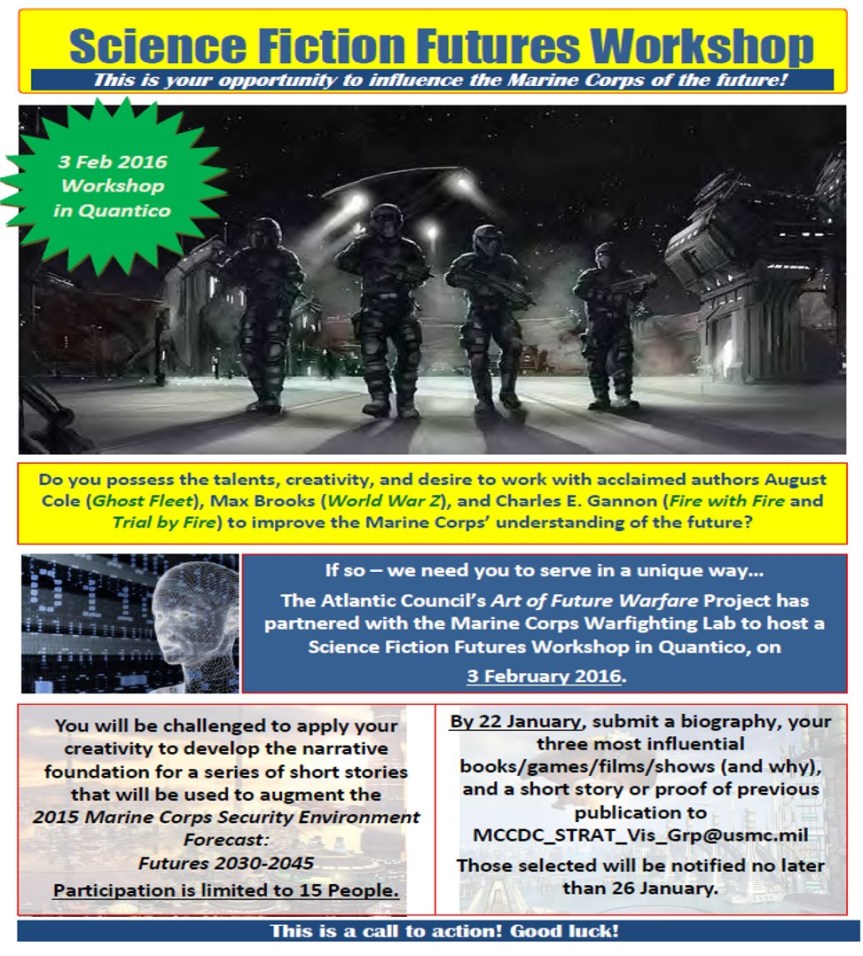

A few years from now, the producers of knowledge will not be human scientists but computer programs and algorithms. Programs like IBM’s Watson will skim through hundreds of thousands of research papers and derive new meanings and insights from them. This will be an entirely new field of scientific research: retrospective research.

Computerized retrospective research is happening right now. A new model in developmental biology, for example, was discovered by an artificial intelligence engine that went over just 16 experiments published in the past. Imagine what will happen when AI algorithms cross and match thousands of papers from different disciplines, and come up with new theories and models that are supported by the research of thousands of scientists from the past!

For that to happen, however, the programs need to be able to go over the vast number of research papers out there, most of which are copyrighted, and held in the hands of the publishers.

You may say this is not a real problem. After all, IBM and other large data companies can easily cover the millions of dollars which the publishers will demand annually for access to the scientific content. What will the academic researchers do, though? Many of them do not enjoy the backing of big industry, and will not have access to scientific data from the past. Even top academic institutions like Harvard University find themselves hard-pressed to cover the annual costs demanded by the publishers for accessing papers from the past.

Many ventures for using this data are based on the assumption that information is essentially free. We know that Google is wary of uploading scanned books from the last few decades, even if these books are no longer in circulation. Google doesn’t want to be sued by the copyright holders, and is thus waiting for the copyrights to expire before it uploads entire books and lets the public enjoy them for free. So many free projects could be conducted to derive scientific insights from literally millions of research papers from the past. Are we really going to wait for nearly a hundred years before we can use all that knowledge? Knowledge, I should mention, that was gathered by scientists funded by the public, and should thus remain in the hands of the public.

What Can We Do?

Scientific publishers are slowly dying, while free publication and open access to papers are becoming the norm. The transition, though, will still take a long time, and it provides no easy and immediate solution for all those millions of research papers from the last century. What can we do about them?

Here’s one proposal. It’s radical, but it highlights one possible course of action: have the government, or an international coalition of governments, purchase the copyrights for all copyrighted scientific papers, and open them to the public. The venture will cost a few billion dollars, true, but it will only have to occur once for the entire scientific publishing field to change its face. It will right the ancient wrong of hiding research behind paywalls. That wrong was necessary in the past when we needed the publishers, but now there is simply no justification for it. Most importantly, this move will mean that science can accelerate its pace by easily relying on the roots cultivated by past generations of scientists.

If governments don’t do that, the public will. Already we see the rise of websites like Sci-Hub, which provide free (i.e. pirated) access to more than 47 million research papers. Having been persecuted by both the publishers and the government, Sci-Hub has just recently been forced to move to the Darknet, the dark and anonymous section of the internet. Scientists who want to browse through past research results, which were almost entirely paid for by the public, will thus have to move over to the Darknet, where weapon smugglers, pedophiles and drug dealers lurk today. That’s a sad turn of events that should make you think. Just be careful not to sell your thoughts to the scholarly publishers, or they may never see the light of day.

—

Dr Roey Tzezana is a senior analyst at Wikistrat, an academic manager of foresight courses at Tel Aviv University, blogger at Curating The Future, the director of the Simpolitix project for political forecasting, and founder of TeleBuddy.