Welcome to the world without secrets.

We’ve all known for decades that politicians have used tax shelters to launder money, to avoid paying tax in their own countries, and to avoid being identified with companies they were affiliated with in the past. Now the cat is out of the bag, thanks to a new leak known as the Panama Papers.

The Panama Papers consist of about 11.5 million highly confidential documents containing detailed information about the dealings of more than 214,000 offshore companies. Such companies are frequently used for money laundering and for obscuring the connections between assets and their owners. Offshore companies are particularly useful to politicians, many of whom are required to declare their interests and investments in companies, and are often required by law to forgo any such ties in order to prevent corruption.

It doesn’t look like that law is working too well.

BBC News described the initial revelations from the Panama Papers as follows:

“The documents show 12 current or former heads of state and at least 60 people linked to current or former world leaders in the data. They include the Icelandic Prime Minister, Sigmundur Gunnlaugsson, who had an undeclared interest linked to his wife’s wealth and is now facing calls for his resignation. The files also reveal a suspected billion-dollar money laundering ring involving close associates of Russian President Vladimir Putin.”

According to Aamna Mohdin, the Panama Papers are the largest leak to date by a wide margin. The source, whoever that is, sent more than 2.6 terabytes of data from the Panama-based law firm Mossack Fonseca.

What lessons can we derive from the Panama Papers event?

Journalism vs. Government

The leaked documents were passed directly to Süddeutsche Zeitung, one of Germany’s leading newspapers, which shared them with the International Consortium of Investigative Journalists (ICIJ). Altogether, 109 media organizations in 76 countries have been analyzing the documents over the past year.

This state of affairs raises the question: why weren’t national and international police forces involved in the investigation? The likely answer is that the ICIJ had good reason to believe that some of the heads of state under suspicion would nip such an investigation in the bud and alert Mossack Fonseca and its clients to the existence of the leak. In other words, journalists in 76 countries worked in secrecy under the noses of their governments, even though those very governments were supposed to help prevent international tax crimes.

Artificial Intelligence Can Help Fight Corruption

The number of leaked documents is massive; there’s no other word for it. As Wikipedia details:

The leak comprises 4,804,618 emails, 3,047,306 database format files, 2,154,264 PDFs, 1,117,026 images, 320,166 text files, and 2,242 files in other formats.

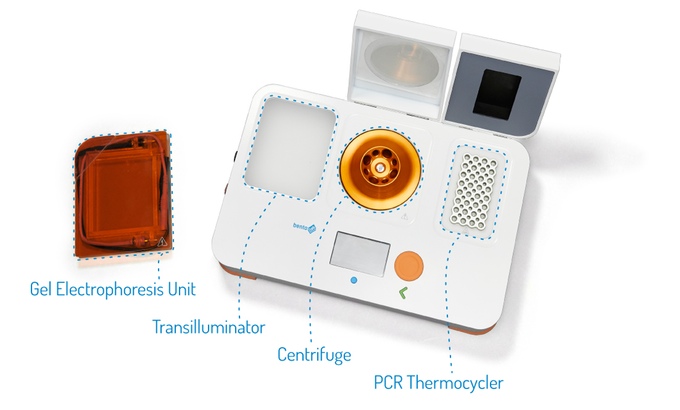

All of this data had to be indexed so that human beings could go through it and make sense of it. To do that, the scanned documents and images were processed with optical character recognition (OCR) systems that made their contents machine-readable. Once the texts were searchable, the indexing was essentially automatic, and the names of important persons could be cross-matched with the relevant records.
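The indexing and cross-matching step can be illustrated with a toy inverted index. This is a minimal Python sketch under simplifying assumptions, not the tooling the ICIJ actually used: the documents are assumed to be already OCR’d into plain text, and the document IDs, names, and the helper functions `build_index`, `find_person`, and `cross_match` are all hypothetical.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    """Build an inverted index: map each token to the set of document IDs containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index


def find_person(index, name):
    """Return IDs of documents containing every token of `name`.

    This is a crude AND-match on tokens: it does not check that the tokens
    appear next to each other, and it ignores spelling variants.
    """
    tokens = tokenize(name)
    if not tokens:
        return set()
    # Copy the first posting set so intersecting does not mutate the index.
    result = set(index.get(tokens[0], set()))
    for token in tokens[1:]:
        result &= index.get(token, set())
    return result


def cross_match(index, watchlist):
    """For each name on a watchlist, list (sorted) the documents that mention it."""
    return {name: sorted(find_person(index, name)) for name in watchlist}


if __name__ == "__main__":
    # Hypothetical OCR output: document ID -> extracted text.
    docs = {
        "email-001": "Please register the new company for Jane Roe by Friday.",
        "pdf-002": "Share certificate issued to Acme Holdings Ltd.",
        "scan-003": "Director: Jane Roe. Beneficial owner: John Smith.",
    }
    index = build_index(docs)
    watchlist = ["Jane Roe", "John Smith", "Richard Miles"]
    for name, hits in cross_match(index, watchlist).items():
        print(f"{name}: {hits}")
```

Running it prints which hypothetical documents mention each watchlist name. The point is that once the text is machine-readable, a name lookup costs a few set intersections instead of a human reading millions of files; a real pipeline would also have to cope with OCR errors and name variants, which this token-matching sketch ignores.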

Could this investigation have been carried out without state-of-the-art computers and optical character recognition systems? Probably, but it would have required a small army of analysts and investigators, putting the task practically out of reach of anyone short of a government authority. Advances in computer science have opened the way for the public (in this case spearheaded by the ICIJ) to investigate the rich and the powerful.

Where’s the Missing Information?

So far, there has been no mention of any American politician with offshore connections to Mossack Fonseca. Does that mean American politicians are all pure and incorruptible? That doesn’t seem very likely. An alternative hypothesis, proposed on EoinHiggins.com, is that certain geopolitical conditions have made it difficult for Americans to use Panama as a tax shelter. But don’t you worry, folks: financial firms in several other countries are working diligently to provide tax shelters for Americans too.

These tax shelters have always drawn some public ire, but never in a major way. The public in many countries has never realized just how much of its tax money was being siphoned away for the benefit of those who could afford to pay for such services. As things become clearer and public outrage begins to erupt, it is quite possible that government investigators will have to focus more of their attention on other tax shelter businesses. If that happens, we’ll probably see further revelations about many, many other politicians in the coming years.

Which leads us to the last lesson learned: we’re going into…

A World without Secrets

WikiLeaks has demonstrated that there can no longer be secrets in any dealings between diplomats. The Panama Papers show that financial dealings are just as vulnerable. The nature of the problem is simple: when information was stored on paper, the size of a leak was limited by the volume of physical documents that could be whisked away. In the digital world, however, terabytes of information containing millions of documents fit on a single external hard drive small enough to hide in a pocket, or can be uploaded online in a matter of hours. Therefore, just one disgruntled employee out of thousands can leak all of a company’s information.

Can any information be kept secret under these conditions?

The issue becomes even more complicated when you realize that the Mossack Fonseca leaker seems to have acted without any monetary incentive. Perhaps the leaker simply wanted the information to reach the public, or wanted revenge on Mossack Fonseca. Either way, consider how many others will do the same for money, or because they’re being blackmailed by foreign governments. Do you honestly believe that the Chinese have no spies in the U.S. Department of Defense, or vice versa? Do you really think that the leaks we’ve heard about are the only ones that have happened?

Conclusions

The world is rapidly being shaken free of secrets. The Panama Papers are just one more link in the chain that leads from a world where almost everything was kept in the dark to a world where everything is known to everyone.

If you think this statement is an exaggeration, consider that in the last five years alone, data breaches at Sony, Anthem, and eBay exposed the personal information of more than 300 million customers. When most of us hear about breaches like these, we’re only concerned about whether our passwords have found their way into hackers’ hands. We tend to forget that the hackers, and anyone who buys the information from them for a dime, also obtain our names, family relations, places of residence, and details about our lives in general and what makes us tick. If you still believe that any information you have online (and maybe on your computer as well) can be kept secret for long, you’re almost certainly fooling yourself.

As the Panama Papers show, this forced transparency need not be a bad thing. It helps us see things for what they are, and understand how the rich and powerful operate. Now the choice is ours: will we try to go back to a world where everything is hidden away and tell ourselves beautiful stories about our honest leaders, or will we accept reality and create and enforce better laws to combat tax shelters?